- El Capitan is a classified US government system that crunches data related to the US nuclear arsenal

- ServeTheHome’s Patrick Kennedy was invited to the launch at LLNL in California

- The CEOs of AMD and HPE also took part in the ceremony

In November 2024, the AMD-powered El Capitan officially became the world’s fastest supercomputer, delivering 2.7 exaflops of peak performance and 1.7 exaflops of sustained performance.

Built by HPE for the National Nuclear Security Administration (NNSA) at Lawrence Livermore National Laboratory (LLNL) to simulate nuclear weapons tests, it is powered by AMD Instinct MI300A APUs. It dethroned the previous leader, Frontier, pushing it down to second place among the world’s most powerful supercomputers.

Patrick Kennedy from ServeTheHome was recently invited to the launch event at LLNL in California, which the CEOs of AMD and HPE also attended. He was allowed to bring along his phone to capture “some shots before El Capitan gets to its classified mission.”

Not the biggest

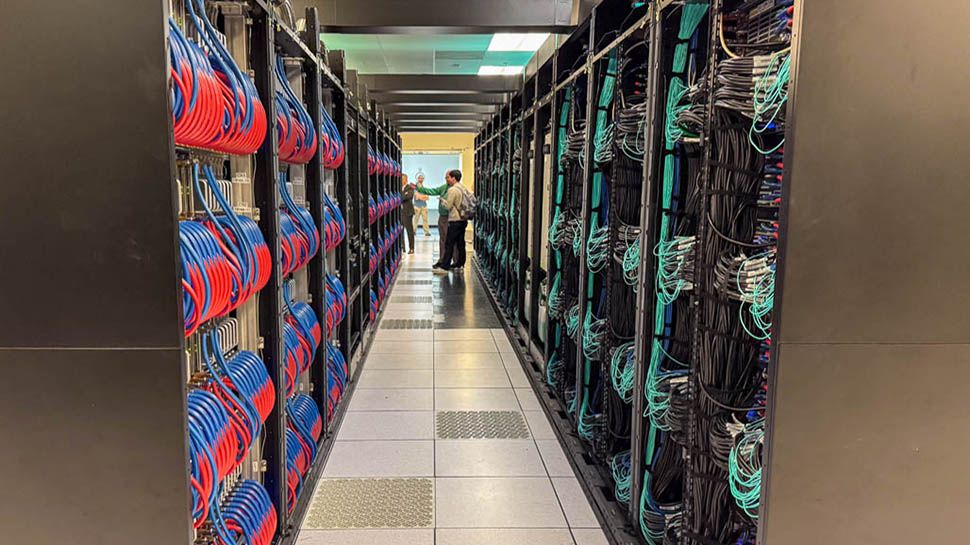

During the tour, Kennedy observed, “Each rack has 128 compute blades that are completely liquid-cooled. It was very quiet on this system, with more noise coming from the storage and other systems on the floor.”

He then noted, “On the other side of the racks, we have the HPE Slingshot interconnect cabled with both DACs and optics.”

The Slingshot interconnect side of El Capitan is, as you’d expect, liquid-cooled, with switch trays occupying only the bottom half of the space. LLNL explained to Kennedy that its codes don’t require full population, leaving the top half for the “Rabbit,” a liquid-cooled unit housing 18 NVMe SSDs.

Looking inside the Rabbit, Kennedy saw “a CPU that looks like an AMD EPYC 7003 Milan part, which feels about right given the AMD MI300A’s generation. Unlike the APU, the Rabbit’s CPU had DIMMs and what looks like DDR4 memory that is liquid-cooled. Like the standard blades, everything is liquid-cooled, so there are not any fans in the system.”

El Capitan is less than half the size that the xAI Colossus cluster was in September, when Elon Musk’s supercomputer was equipped with “just” 100,000 Nvidia H100 GPUs (plans are afoot to expand it to a million GPUs). Even so, Kennedy points out that “systems like this are still huge and are done on a fraction of the budget of a 100,000 plus GPU system.”