64% of Surveyed Whitehats Find ChatGPT Lacks Accuracy in Identifying Security Vulnerabilities – Immunefi

While most surveyed whitehats have used ChatGPT in Web3 security work and see potential in it, they have raised concerns about its ability to identify security vulnerabilities, according to a report by the bug bounty and security services platform Immunefi.

OpenAI released its artificial intelligence chatbot ChatGPT in November 2022. Within two months, it reached 100 million users – but as its user base grew so did concerns about security, privacy, and ethics.

165 of the most active whitehats in the Web3 security community participated in Immunefi’s survey conducted in May 2023, the platform told Cryptonews. The newly released ‘ChatGPT Security Report’ was compiled in June.

The adoption of ChatGPT among whitehats is relatively high, the report found. 76.4% of the respondents used the technology, while 36.7% incorporated it into their daily workflow.

As for use cases, 74% said that the novel technology is best suited for educational purposes.

Notably, the report stated that,

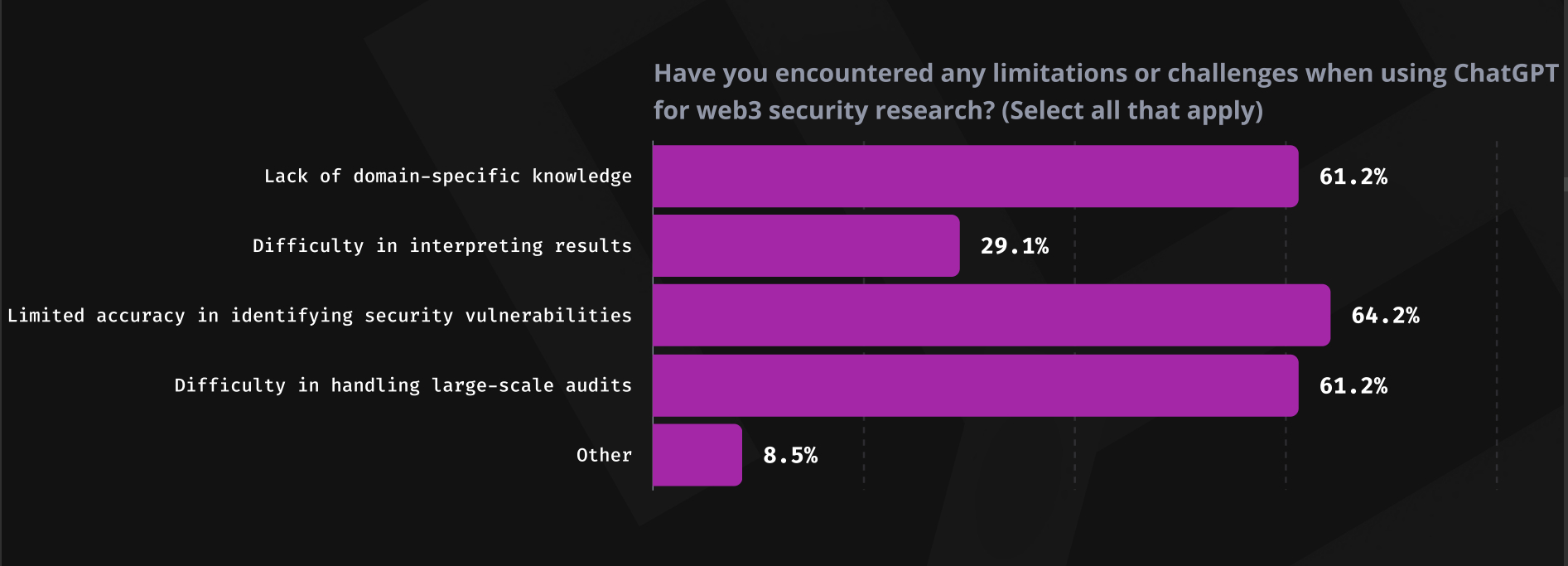

“While smart contract auditing [60.6%] and vulnerability discovery [46.7%] are regarded by the community as particular use cases, in turn, the most commonly cited concerns were limited accuracy in identifying security vulnerabilities [64.2%], lack of domain-specific knowledge [61.2%], and difficulty in handling large-scale audits [61.2%].”

Per the report, 76.4% of the whitehats reported using ChatGPT for security practices.

Rating their level of confidence in ChatGPT’s ability to identify Web3 security vulnerabilities, 35% said they were moderately confident, 29% were somewhat confident, and 26% were not confident.

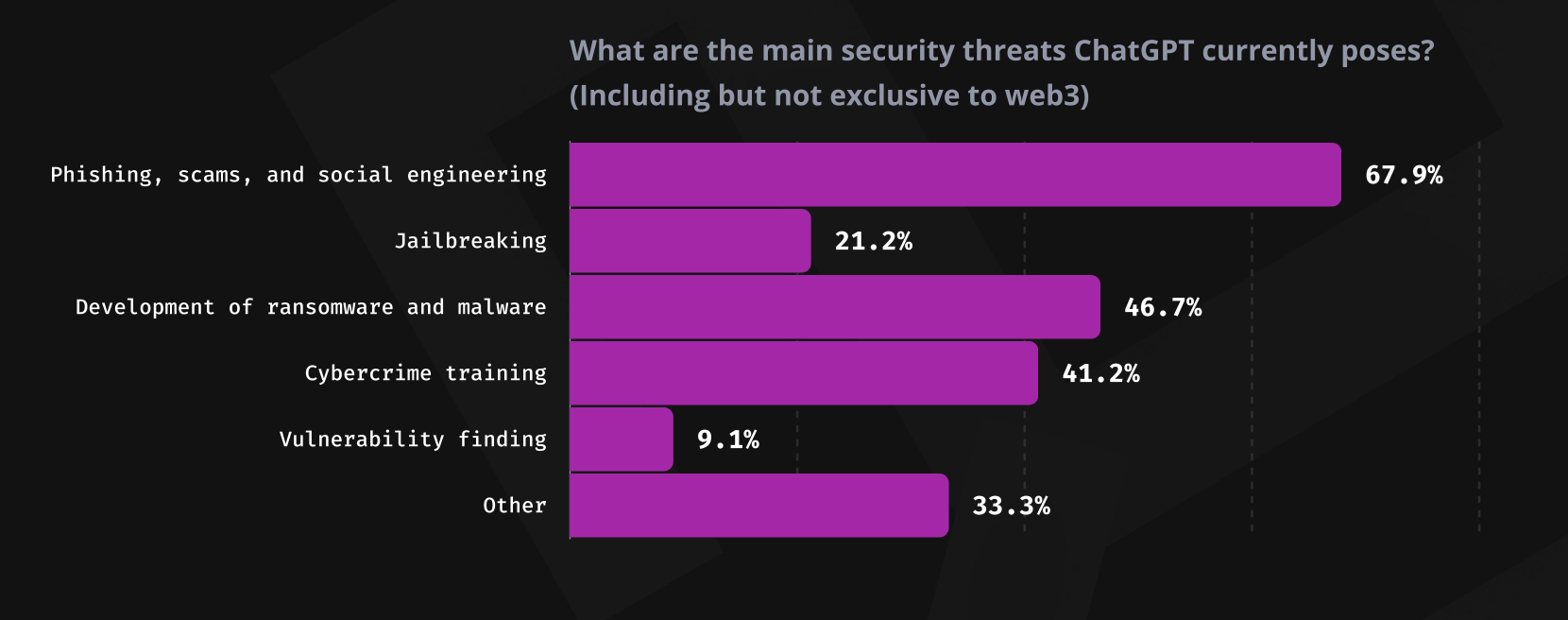

52% said that the general use of ChatGPT presents security concerns, including phishing, scams, social engineering, and ransomware and malware development.

The majority, or 75%, said that ChatGPT has the potential to improve Web3 security research, but that further fine-tuning and training are necessary.

That said, most (68%) would recommend ChatGPT to colleagues as a tool for Web3 security.

ChatGPT-generated Bug Reports are Akin to Spam

Immunefi says it is protecting over $60 billion in user funds, and that it has paid out over $75 million in total bounties and saved over $25 billion in user funds. It still offers over $154 million in available bounty rewards.

After ChatGPT was released, the platform started receiving “a flood” of bug reports. While the reports were well written, their underlying claims were “nonsensical,” referring to nonexistent functions or confusing key programming concepts.

“To date, not a single real vulnerability has been discovered through a ChatGPT-generated bug report submitted via Immunefi.”

Therefore, these reports amounted to spam, said Immunefi. They were submitted by individuals without security skills “hoping that web3 bug bounty hunting would be as easy as entering in some ChatGPT prompts.”

Immunefi implemented a new rule to permanently ban accounts submitting ChatGPT-generated reports.

Notably, 21% of the accounts banned from Immunefi were banned for submitting this type of bug report.
